EuroSTAR 2021: Working with teams to improve quality

EuroSTAR 2021 is over and there is so much to reflect on. Back in March, I helped out and I even described the process that I followed. I participated in EuroSTAR with a talk around the "engage" theme. What better than to talk about engaging within our own teams in order to improve quality from multiple perspectives?

I started with this question: "Improving quality is a doomed project... or is there a way out?".

During the talk I enumerated some real exercises that we've done to overcome this challenge and that you can perhaps try out in your team. In this article I won't go into the details of each one of these exercises; maybe I'll leave that to another post. However, I want to share some thoughts on how to engage, and on how we can split that into the 3 steps discussed ahead.

Engaging

Preparing a talk around the "engage" theme made me reflect for a while. Well, that is always a good thing :)

I looked back and thought a bit about some activities I got involved in with one team.

First, what do we mean by engage? We may think of it as a way of reaching out to someone in order to make something happen. However, this may be quite a restricted vision of it. Of course engaging has a goal, but we want that to become a shared goal, something compelling, that touches our heart and soul. That is like the fuel to ignite something bigger. There are many different ways of engaging and pursuing that goal, as we'll see ahead.

During my talk, I tried to make a connection between a well-known good pattern for writing test automation code, the "3 A's" (Arrange, Act, Assert), and one that we could try applying in our teams: Acknowledge, Act, Assess.
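For readers less familiar with the testing pattern, here is a minimal sketch of Arrange, Act, Assert in Python. The `ShoppingCart` class and the test name are hypothetical, invented only for illustration; they were not part of the talk.

```python
from dataclasses import dataclass, field


@dataclass
class ShoppingCart:
    """Hypothetical class under test, invented for this illustration."""
    items: dict = field(default_factory=dict)

    def add(self, name: str, price: float, quantity: int = 1) -> None:
        # Keep the latest price and accumulate the quantity per item name.
        _, current_qty = self.items.get(name, (price, 0))
        self.items[name] = (price, current_qty + quantity)

    def total(self) -> float:
        return sum(price * qty for price, qty in self.items.values())


def test_total_sums_price_times_quantity() -> None:
    # Arrange: set up the object and the data the test needs
    cart = ShoppingCart()
    cart.add("notebook", 3.0, quantity=2)
    cart.add("pen", 1.5)

    # Act: perform the single behavior under test
    result = cart.total()

    # Assert: check the outcome against the expectation
    assert result == 7.5
```

The three comments mark the same rhythm that the team-level version borrows: first understand the starting state, then do one thing, then check what happened.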

Acknowledge

Acknowledge is about understanding where we stand and what our blockers are for achieving higher quality in the products we're making, deploying, and supporting.

How are we turning ideas into value, and is that value being cared for in production? The best way to find out is to engage: initiate a discussion and a brainstorming session about the whole software lifecycle.

Who is involved, when, how, and how deep? What is the flow of information and processes? Where is the knowledge (tricky question)? What are the artifacts we're making and for what purpose? Who is getting blocked, how and when? Drawing our overall process and pipeline can be quite useful and insightful; doing it together brings additional clarification and visibility about some main blockers right away.

Visualizing some aspects of how we are working and having the time to reflect is key. Doing that as a team is essential. This exercise was detailed in a previous post.

In the end, we have a set of actions, hopefully prioritized.

Act

Act is about making sure those actions get done. How we get them done is actually something interesting. We can act by doing, but we can also act by exemplifying, by spreading the love about a certain topic (e.g., unit testing), by increasing knowledge, or by bringing awareness about something.

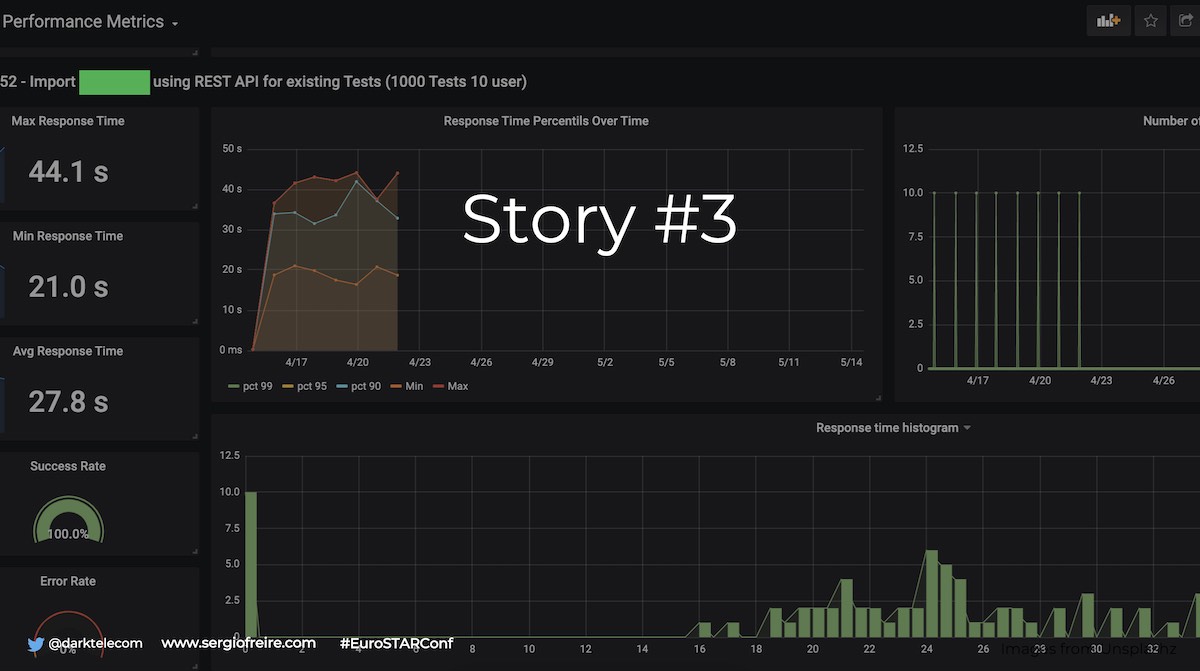

But one way of acting, which for me is one of the most interesting ones, is to act by engaging to give opportunities so people can shine. We had a developer within the team who wanted to make a shift to test automation; we knew it, so this colleague was given the opportunity to address one key concern we had related to performance. This person, with the help of the team, was able to get an environment up that tracks performance on a continuous basis, helping out the whole team.

Assess

Everything we do needs to be continuously improved. Software and quality are not static. Teams are not static. The world isn't static. Therefore we need to always keep reflecting on what we're doing and think of ways to improve it further. Sometimes, we may even have to take a step back and discard some technique, process, tool, artifact, whatever.

In this team, it was clear from the continuous discussions we had that we needed to improve collaboration and shared understanding. Therefore, we tried out ensemble programming (also known as "mob" programming). And that was a win for us. But we also learned we needed to fine-tune it, and that's what we did and keep doing. We have to adapt practices, because people are not APIs; we are humans.

So can we improve quality?

In my experience, quality can always be improved. There's no single technique, process, or tool to achieve it. Problems are usually related to lack of knowledge, lack of true collaboration, and lack of proper tooling.

We need to start by acknowledging where we stand, to identify the items we need to address that can benefit the team as a whole, and prioritize them.

We then need to actually do something about those; we need to act, act by doing and act by exemplifying, and also act by empowering others. Last but not least, we need to always evaluate our progress, because we need to be able to adapt and correct our route as soon as possible.

Improving quality is not a straight road. It's a route, where we may need to turn at different places, at different moments. Sometimes we may even have to stop or take a step back.

We need to seed knowledge and care for it by collaborating within the team. Knowledge grows better when you care for it, whenever the team cares for it. And as with any seed, if you can pick, use, or even build tools that help you be more productive along the way... all the better!

You may be planting knowledge. But your crop will be... quality improvement!

Questions

During my presentation, several questions came up. I think they are quite interesting, and I want to share my perspective, which may help others out there or even make you reflect a bit.

You shared some experiences and exercises that people can try in order to improve how they work. What was the one that had the most impact? Did any of them surprise you?

All the exercises done with and within the team had an impact. Advocating continuously for unit testing, supported by workshops based on real examples, made quite a difference in the number of unit tests, the related coverage, and the confidence. However, changing the way people work and collaborate by adopting ensemble programming sessions increased not only confidence but also shared understanding (i.e., knowledge). Ensemble sessions were a surprise because they represented quite a change from the way we were working before, mostly based on solo programming + pull requests + testing a bit aside. I thought it would be quite hard, but it wasn't, and people were satisfied. As mentioned during the talk, we use a mix: ensemble programming, pair programming (+ a tester), and solo programming. Most of the work gets done through these ensemble sessions though.

What do you mean by "untrustworthy things to test?" Could you give an example?

In the testing debt quadrants, one of the quadrants is aimed at those things that we cannot really trust. And why wouldn't we trust them? Because we may lack information. Imagine a product that integrates somehow with another product that you usually don't test. That integration can be based on an API, but it can also make use of some shared CSS, for example. You may also not fully understand the use cases of the external product, so certain parts of how that integration is used are unknown. Therefore, thinking of a more end-to-end user journey, bits of your product may be used in ways that you didn't foresee. Other examples could include the fact that you don't have representative test data, or maybe your data is not big enough.

How could we improve QA/Dev relationship?

What would you recommend/advise to testers on how to practice effective collaboration / engagement with the dev team when the dev team is actually in the middle of building the system?

That's an excellent and recurring question, but we can generalize it to any other relationship within the team. Between devs<>testers, first we need to talk a bit about our blockers... as a whole, and for each one of us. Doing some exercises together is quite helpful because it makes people talk about a certain topic and start having a shared understanding of the concerns and challenges. At that point, people will start offering to address some of these challenges, no matter the role they have. We hear a lot about "quality is a shared responsibility"; that's true, but getting to the point where people understand it requires working on it daily, in every aspect of what we do. Therefore it's important to start having truer ownership of what we build and how we build, deploy, and operate it. We should avoid having conversations where a certain quality aspect is assigned to a specific person. We want devs to excel in what they do and how they do it, relieving them of the burden of dealing with major issues; testers can help make that happen. Whenever a dev is working on a story (well, it's only that dev but I'm simplifying it), that code gets tested later on and then is sent back a few times... well, we can take that moment to say "well, instead of sending this back all over again, let me try to help out next time: as soon as you start working on the story, ping me and I'll join you to help clarify things from the start". The whole idea is to make the tester not the "bad news messenger" but instead "the window opener", who will show what is behind it and agree on the way to move forward in the world behind that window.

How do you convince devs to get testers into such pair programming sessions? I often hear things like "testers slow us down during pair sessions...I don't have time to explain stuff to the tester."

Testers are invited to these sessions. Most times they are present; sometimes they may not be. Testers' engagement also depends on what is being done: if it's a more coding-related aspect, maybe the tester does something else. The idea is to have a kind of open format, where testers can join... and if they are not there, devs can easily ping them to ask for their insights. Having an open stage is a good thing. Getting testers into these sessions was not that hard in our case. Some time ago we had concluded that we needed to improve dev<>tester collaboration, even while the team was working mainly using PRs (pull requests). Back then, we worked with devs to make them more open to inviting testers to help out by giving feedback early. It took a bit of time to get rolling, but after some time devs appreciated it because they saw fewer stories being sent back for fixing. Moving on from that point, where some collaboration was already going on, having testers in the ensemble sessions was natural; however, as mentioned earlier, they don't need to stick around all the time.

How hard was it to form the initial groups of testers and developers? Did it all go smoothly or were there some starting problems (and if so, how did you solve them)?

It went smoothly, AFAIK. These groups are not fixed. We tried to have some balance within the groups, so we don't have all the experts in one group and all the juniors in a separate one.

Thanks for reading this article; as always, this represents one point in time of my evolving view :) Feedback is always welcome. Feel free to leave comments, share, retweet or contact me. If you can and wish to support this and additional content, you may buy me a coffee ☕