Model-based and sequential testing: a brief comparison

In this post I will briefly compare "traditional" (i.e. sequential) automated tests/checks with MBT (Model-Based Testing), using some concrete examples, including code, to reflect on both approaches.

I made my code and my findings available as open-source in this GitHub repo. Feel free to check out the code, fork it, run it, play with it, whatever :)

If you want, you can skip the details and jump to the conclusions.

Background

As part of Automation Week, Ministry of Testing challenged testers to address some problems related to test automation; these would include implementing automated checks/tests for the UI of a dummy booking platform.

The idea would be to share the problems, the approach, and the learnings with the community. Selected reports would be able to present during Test.Bash('Online') 2020, and I was one of the lucky ones to do so.

For me this was an opportunity to learn Python, even though I've made some limited open-source contributions in the past. It was also a way to explore Model-Based Testing (MBT) further. My goal was also to find use cases and limitations, pros and cons (?) of MBT.

Overview of the Web UI challenges

Next follows a description of the UI challenges for the sample website application Restful Booker Platform, kindly provided by Mark Winteringham / Richard Bradshaw. As you'll see, the challenges are pretty straightforward. The last one requires a bit more care to implement though.

Challenge 1:

Create an automated test that completes the contact us form on the homepage, submits it, and asserts that the form was completed successfully.

Challenge 2:

Create an automated test that reads a message on the admin side of the site.

You’ll need to trigger a message in the first place, login as admin, open that specific message and validate its contents.

Challenge 3:

Create an automated test where a user successfully books a room from the homepage. You’ll have to click ‘Book this Room’, drag over dates you wish to book, complete the required information and submit the booking.

Approach for implementing automated tests

I decided to follow two different approaches, so I could compare them with one another:

- the first one using pytest, with "standard" automated checks made of sequential actions/expectations

- another using Model-Based Testing (MBT), using AltWalker which in turn uses GraphWalker

Both make use of the Page Object Model (POM), facilitated by the pypom library. As pages can have different sections/regions, we can abstract those as classes inherited from the Region class. This makes the code cleaner and more readable.

    from pypom import Page, Region
    from selenium.webdriver.common.by import By

    class FrontPage(Page):
        """Interact with frontpage."""

        _admin_panel_locator = (By.LINK_TEXT, "Admin panel")

        class ContactForm(Region):
            _contact_form_name_locator = (By.ID, "name")
            ...

In both implementations, you'll see references to a fake data generation library. I've used controlled randomization of data to provide greater coverage; this is especially valuable in the MBT implementation as the model can be exercised automatically "indefinitely" (to a certain point).

Standard tests using pytest

This implementation is what I would call the "traditional" (i.e. common) way of implementing automated tests, where you implement a set of sequential actions and one or more asserts/expectation checks.

The following code is an example of one such test (you can find more in the file standard_pom_tests.py).

    def test_contact_form_successful(self):
        page = FrontPage(self.driver, BASE_URL)
        page.open()
        page.contact_form.wait_for_region_to_load()
        page.contact_form.fill_contact_data(
            name="sergio", email="sergio.freire@example.com", phone="+1234567890",
            subject="doubt", description="Can I book rooms up to 2 months ahead of time?")
        self.assertEqual(
            page.contact_form.contact_feedback_message,
            "Thanks for getting in touch sergio!\nWe'll get back to you about\ndoubt\nas soon as possible.")

In this case the data was initially hard-coded. However, by using the faker library we can create a custom test data provider for the contact, and our test method can be rewritten as:

    def test_contact_form_successful(self):
        page = FrontPage(self.driver, BASE_URL)
        page.open()
        page.contact_form.wait_for_region_to_load()
        name = fake.valid_name()
        email = fake.valid_email()
        phone = fake.valid_phone()
        subject = fake.valid_subject()
        description = fake.valid_description()
        page.contact_form.fill_contact_data(
            name=name, email=email, phone=phone, subject=subject, description=description)
        self.assertEqual(
            page.contact_form.contact_feedback_message,
            f"Thanks for getting in touch {name}!\nWe'll get back to you about\n{subject}\nas soon as possible.")

Using a controlled custom test data generator can be quite helpful as we'll see ahead. It's also a way of handling test data, as long as it is not fully random (as you may want to reproduce things afterwards).
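
To make the "controlled, not fully random" idea concrete, here is a minimal sketch using only the standard library. It is not the faker-based provider from the repo: the class name, the sample values and the seed are all assumptions made for illustration. The point it shows is that seeding the generator lets a failing run be replayed with the same data.

```python
import random


class ContactDataProvider:
    """Hypothetical stand-in for a custom faker provider (stdlib only)."""

    # Illustrative sample values, not taken from the original project.
    NAMES = ["Alice Martin", "Bruno Costa", "Chen Wei"]
    SUBJECTS = ["Booking question", "Late check-in", "Invoice request"]

    def __init__(self, seed):
        # A private Random instance keeps the sequence independent of other code.
        self._rng = random.Random(seed)

    def valid_name(self):
        return self._rng.choice(self.NAMES)

    def valid_subject(self):
        return self._rng.choice(self.SUBJECTS)

    def valid_phone(self):
        # 11 digits; this sketch assumes that length is acceptable to the form.
        return "".join(self._rng.choice("0123456789") for _ in range(11))


# Same seed, same data: a failing run can be replayed by reusing its seed.
first = ContactDataProvider(seed=1234)
replay = ContactDataProvider(seed=1234)
run_one = (first.valid_name(), first.valid_subject(), first.valid_phone())
run_two = (replay.valid_name(), replay.valid_subject(), replay.valid_phone())
print(run_one == run_two)  # True
```

Logging the seed at the start of each run is usually enough to reproduce a failure later.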

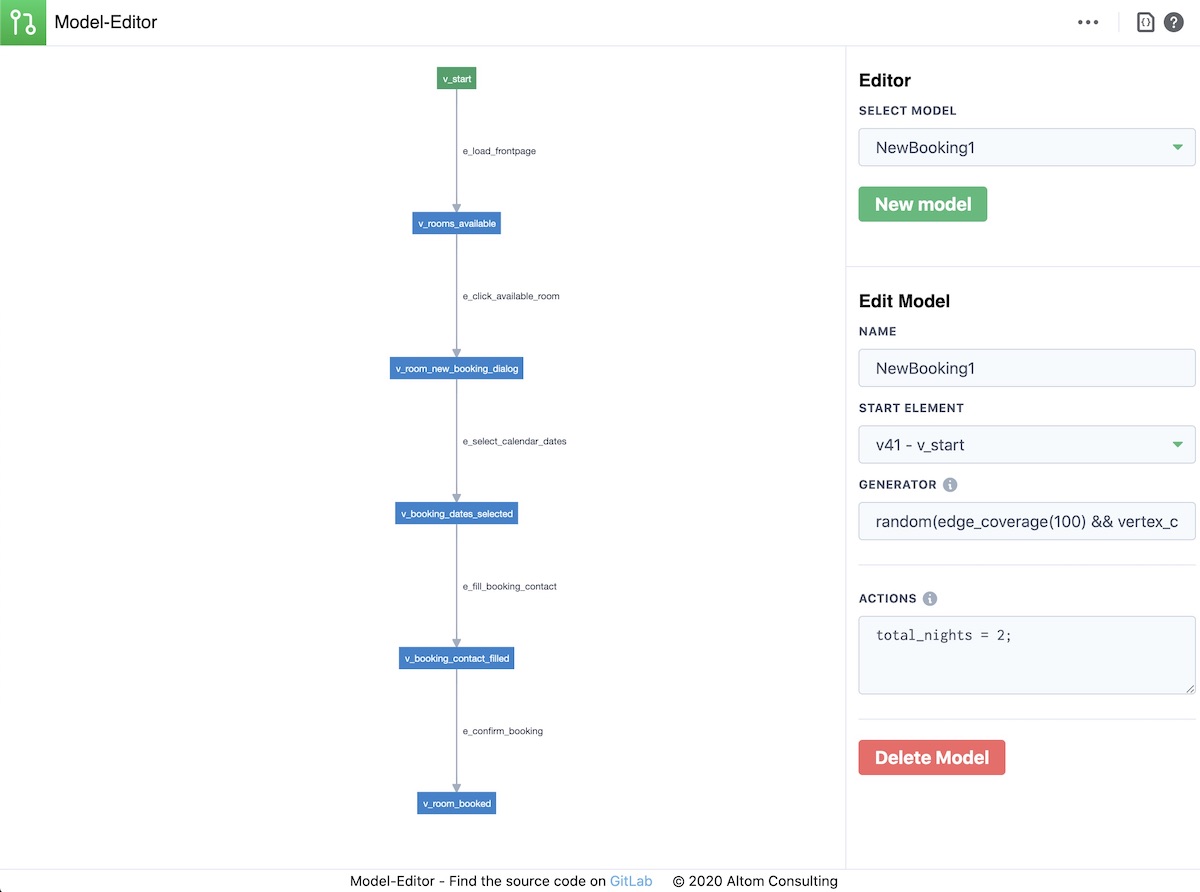

Model-based tests using AltWalker and GraphWalker

With MBT, usually we have the model (either made using a visual model editor or from the IDE) and the underlying code. In our case, models are stored in JSON format under the models directory. The test code associated with vertices and edges is implemented in the file test.py.

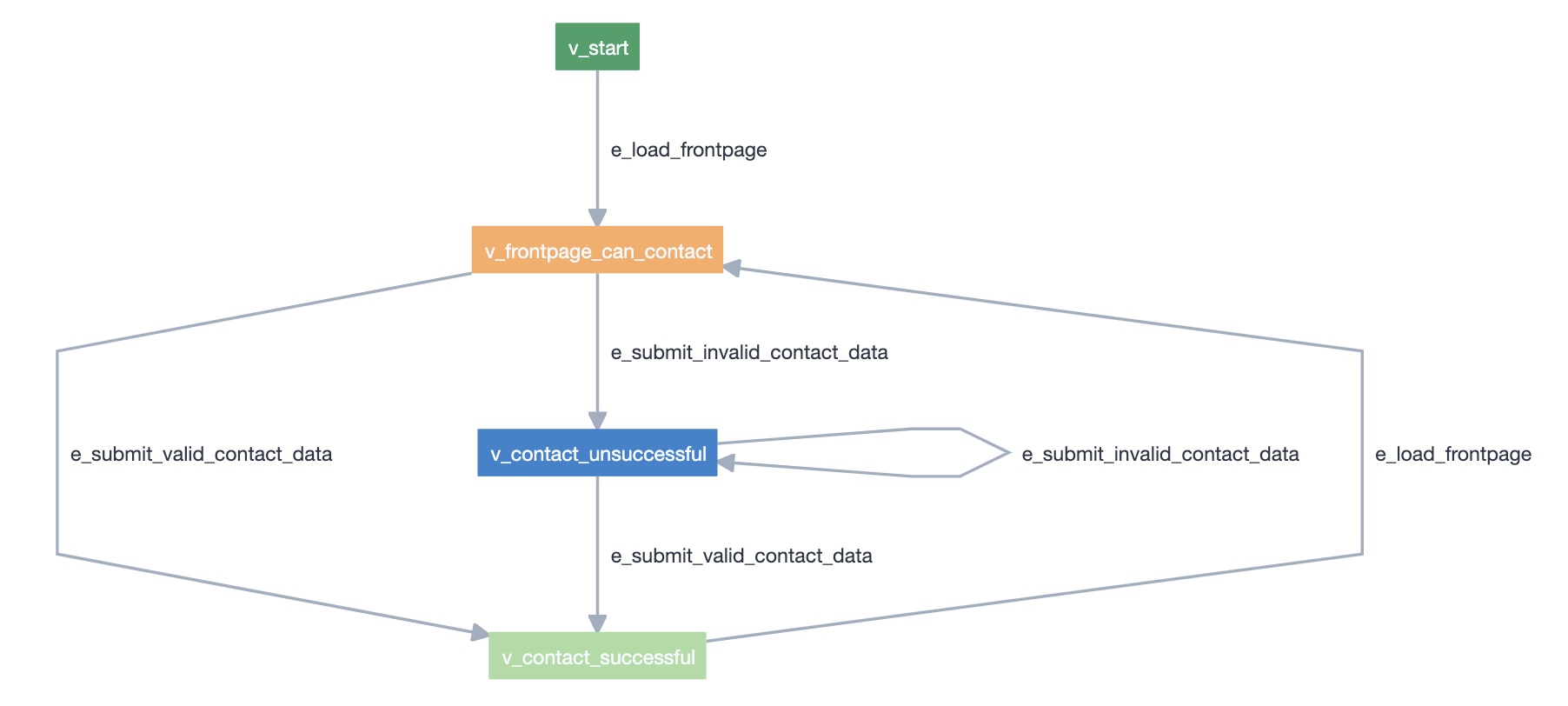

Using Model Editor (or GraphWalker Studio), we can model our application using a directed graph. In simple words, each vertex represents a state and each edge is a transition/action made in the application. Tests are made on the vertices/states.

Modeling is a challenge in itself and we can model the application and how we interact with it in different ways. Models are not exhaustive; they're a focused perspective on a certain behavior that we want to understand. MBT provides greater coverage and also a great way to visualize and discuss the application behavior/usage.

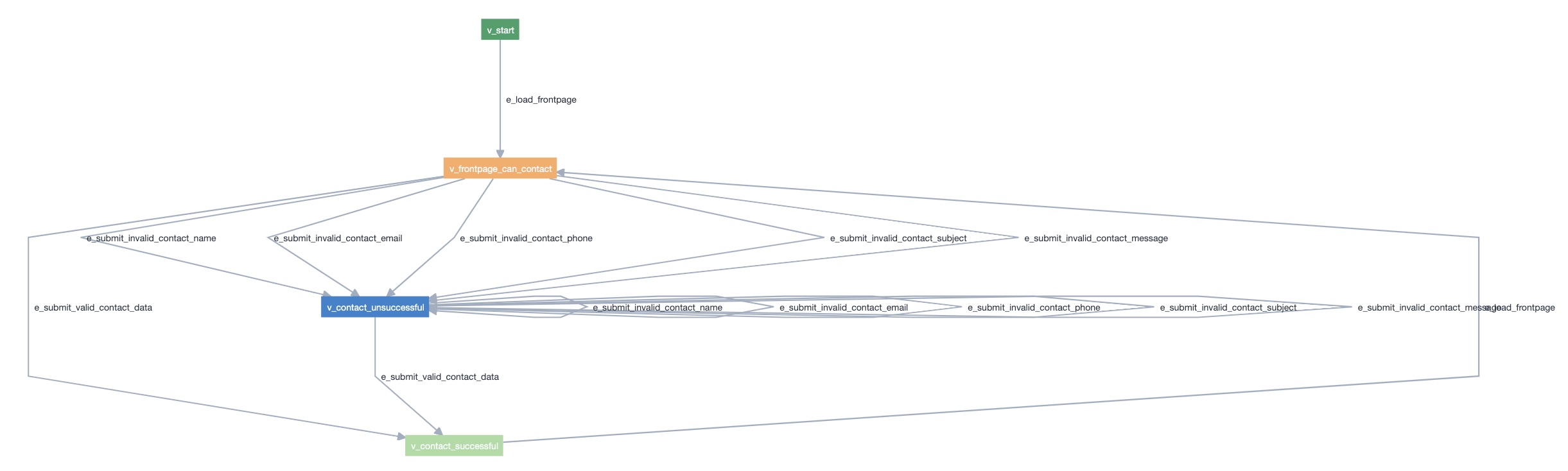

Addressing challenge 1 with MBT

For the first challenge (i.e. contact form submission), we start from an initial state, from where we just have one possible action/edge: load the frontpage. Then we can consider another state, where the frontpage is loaded and the contact form is available. Two additional states are possible: one for a successful contact and another for an unsuccessful contact submission. We can go to these states by either submitting valid or invalid contact data.

One curious thing comes out from the model: after a successful contact, we can only make a new contact if we load/refresh the frontpage again. Was this an expected behavior? Well, we would have to discuss with the team.

With GraphWalker Studio, we can run the model offline and see the paths (sequence of vertices and edges) performed.
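
As a rough illustration of what "running the model offline" produces, the sketch below walks a toy version of the contact form model at random and prints the resulting path. It is plain Python, not GraphWalker's generator; only e_submit_valid_contact_data and v_contact_successful are names from my model, and the remaining vertex and edge names are hypothetical.

```python
import random

# Toy model of the contact form: vertex -> list of (edge, target vertex).
# Only e_submit_valid_contact_data and v_contact_successful come from the
# article; every other name here is hypothetical.
MODEL = {
    "v_start": [("e_load_frontpage", "v_frontpage_loaded")],
    "v_frontpage_loaded": [
        ("e_submit_valid_contact_data", "v_contact_successful"),
        ("e_submit_invalid_contact_data", "v_contact_unsuccessful"),
    ],
    # After a successful contact, the only way forward is a page reload.
    "v_contact_successful": [("e_load_frontpage", "v_frontpage_loaded")],
    # Assumption: an unsuccessful submission leaves the form available
    # for another attempt.
    "v_contact_unsuccessful": [
        ("e_submit_valid_contact_data", "v_contact_successful"),
        ("e_submit_invalid_contact_data", "v_contact_unsuccessful"),
    ],
}


def random_walk(model, start, steps, seed):
    """Return the visited path as alternating vertex and edge names."""
    rng = random.Random(seed)
    vertex = start
    path = [vertex]
    for _ in range(steps):
        edge, vertex = rng.choice(model[vertex])
        path.extend([edge, vertex])
    return path


path = random_walk(MODEL, "v_start", steps=6, seed=7)
print(" -> ".join(path))
```

Each run with a different seed yields a different sequence of edges and vertices, which is where the extra coverage over a single hard-coded test comes from.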

The code for each vertex and edge is quite simple as seen ahead.

Example of e_submit_valid_contact_data code, showcasing usage of faker library:

    def e_submit_valid_contact_data(self, data):
        page = FrontPage(self.driver, BASE_URL)
        page.contact_form.wait_for_region_to_load()
        name = fake.valid_name()
        email = fake.valid_email()
        phone = fake.valid_phone()
        subject = fake.valid_subject()
        description = fake.valid_description()
        data['global.last_contact_name'] = name
        data['global.last_contact_email'] = email
        data['global.last_contact_phone'] = phone
        data['global.last_contact_subject'] = subject
        data['global.last_contact_description'] = description
        page.contact_form.fill_contact_data(
            name=name, email=email, phone=phone, subject=subject, description=description)

Example of v_contact_successful code:

    def v_contact_successful(self, data):
        page = FrontPage(self.driver, BASE_URL)
        name = data['last_contact_name']
        subject = data['last_contact_subject']
        self.assertEqual(
            page.contact_form.contact_feedback_message,
            f"Thanks for getting in touch {name}!\nWe'll get back to you about\n{subject}\nas soon as possible.")

If we were using just this model, then the contact details could be temporarily stored as regular object variables (each model, on the code side, is an object). However, that would limit us in case we want to have shared states between models, as we'll see ahead.

    def e_submit_valid_contact_data(self, data):
        page = FrontPage(self.driver, BASE_URL)
        page.contact_form.wait_for_region_to_load()
        # variables could be saved on the Python side, in this object, but we'll
        # need to share them between models which use a different class & object
        self.name = fake.valid_name()
        self.subject = fake.valid_subject()
        ...

One can make this model a bit more detailed and complex, by making explicit edges/transitions for the process of submitting one field as invalid. This makes the graph harder to read though and it will only be relevant if we want to distinguish those cases.

But we have to ask ourselves: is it worth implementing this distinction? What are we trying to achieve/model exactly?

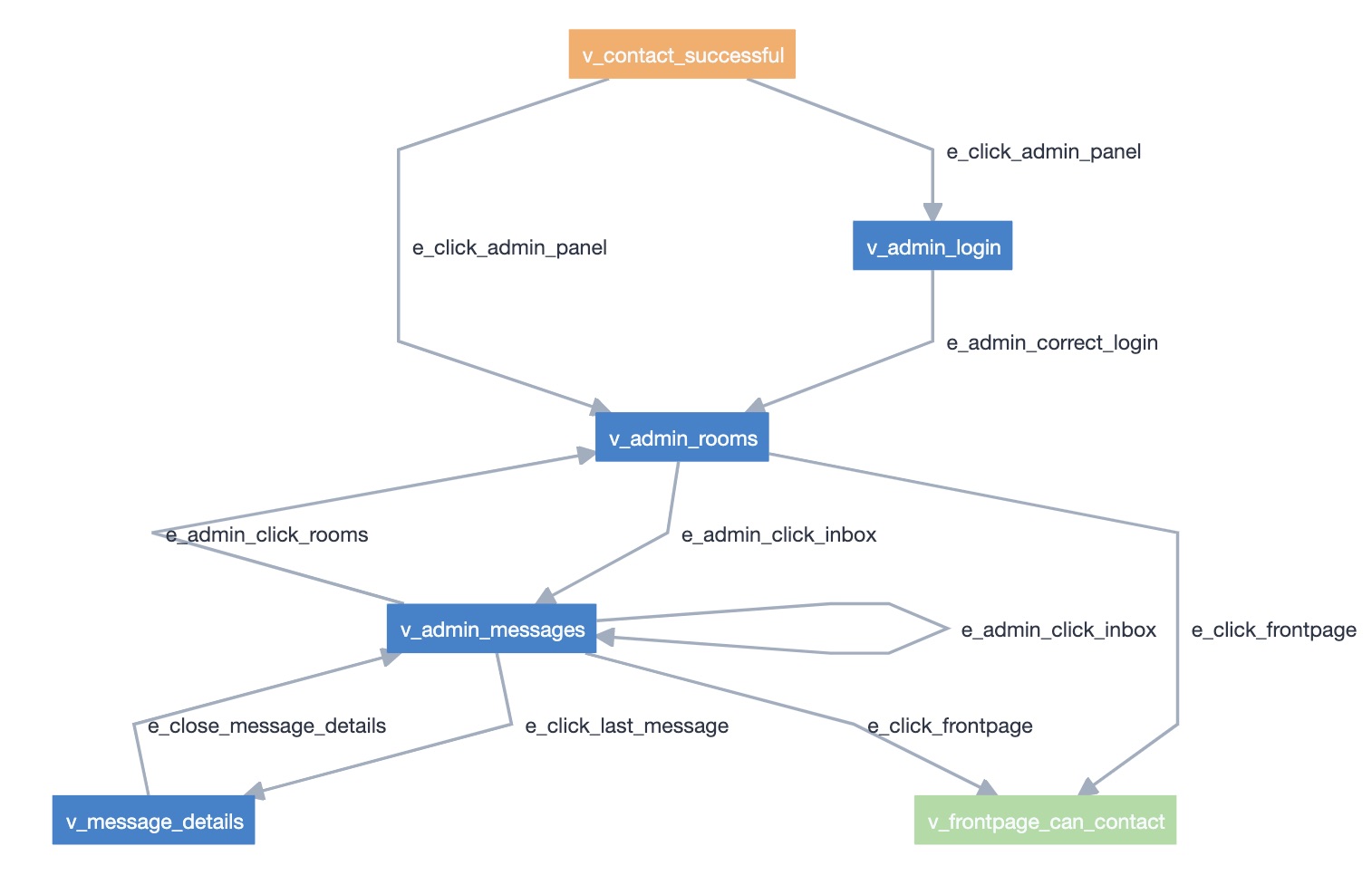

Addressing challenge 2 with MBT

In order to validate that the contact/message appears correctly in the admin page (2nd challenge), we start from a vertex/state related to a successful contact. Note that this vertex has a shared state with the first model shown earlier, which allows AltWalker/GraphWalker to jump from one model to the other.

We can then go to the admin panel, authenticate if needed, go to the inbox/messages section, open and check the details of the last contact message. We can see several edges corresponding to actions that can be done, allowing us to traverse the graph and thus go to different application states.

Some edges have "actions" defined in the model, to set an internal variable that can be useful later on, in case we want to constrain the paths somehow.

Example of action defined in e_admin_correct_login:

logged_in=true;

Some edges have "guards", so they're only performed if those guard conditions are true. In the following example, the edge is only executed if not yet logged in (which uses a variable set before in an action).

Example of guard defined in e_click_admin_panel (from v_contact_successful to v_admin_login):

logged_in!=true
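
The interplay between actions and guards can be sketched in plain Python. This is a toy interpreter written for illustration, not how GraphWalker evaluates models; the Edge type, v_admin_panel and e_open_admin_panel_logged_in are hypothetical, while the other names and the logged_in variable mirror the examples above.

```python
from typing import Callable, NamedTuple, Optional


class Edge(NamedTuple):
    """Hypothetical edge record: guards read variables, actions update them."""
    name: str
    source: str
    target: str
    guard: Optional[Callable[[dict], bool]] = None
    action: Optional[Callable[[dict], None]] = None


EDGES = [
    # Guard "logged_in!=true": only enabled while not logged in.
    Edge("e_click_admin_panel", "v_contact_successful", "v_admin_login",
         guard=lambda v: not v["logged_in"]),
    # Action "logged_in=true;": remembers that the login happened.
    Edge("e_admin_correct_login", "v_admin_login", "v_admin_panel",
         action=lambda v: v.update(logged_in=True)),
    # Hypothetical shortcut that is only enabled once logged in.
    Edge("e_open_admin_panel_logged_in", "v_contact_successful", "v_admin_panel",
         guard=lambda v: v["logged_in"]),
]


def enabled_edges(vertex, variables):
    """Edges leaving `vertex` whose guard (if any) currently holds."""
    return [e for e in EDGES
            if e.source == vertex and (e.guard is None or e.guard(variables))]


def take(edge, variables):
    """Run the edge's action, if any, and return the target vertex."""
    if edge.action is not None:
        edge.action(variables)
    return edge.target


variables = {"logged_in": False}
before = [e.name for e in enabled_edges("v_contact_successful", variables)]
vertex = take(enabled_edges("v_contact_successful", variables)[0], variables)
vertex = take(enabled_edges(vertex, variables)[0], variables)
after = [e.name for e in enabled_edges("v_contact_successful", variables)]
print(before, after)
# ['e_click_admin_panel'] ['e_open_admin_panel_logged_in']
```

Once the action has flipped logged_in, the guarded login edge is no longer offered, so the path generator cannot try to log in twice.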

In this exercise and in the previous one, we take advantage of model variables (e.g. last_contact_name, last_contact_subject) to temporarily store information about the last contact so we can make the asserts on the vertex later on. The contact details are filled in on the code side and are copied back to the model variables used for this purpose. Passing data between the edge/vertex code and the model can be done using an optional argument (e.g. "data") on the respective methods. Variables will be serialized/deserialized, so use them wisely.

    def e_submit_valid_contact_data(self, data):
        ...
        data['global.last_contact_name'] = name
        data['global.last_contact_email'] = email
        data['global.last_contact_phone'] = phone
        data['global.last_contact_subject'] = subject
        data['global.last_contact_description'] = description
        ...

On the model side, variables can be local to each model or they can be global. Global variables are useful in case we need to access some information from another model. Having a ton of global variables can be hard to manage though.
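
Why shared variables are needed across models can be shown with a small sketch. A plain dict stands in for the model variables here; AltWalker's actual serialization and its global/local scoping are not reproduced, and the class names and the v_message_details vertex are hypothetical.

```python
class ContactModel:
    """Hypothetical stand-in for the class behind the contact form model."""

    def e_submit_valid_contact_data(self, data):
        # In the real tests these values come from the test data provider.
        data["last_contact_name"] = "Alice Martin"
        data["last_contact_subject"] = "Booking question"


class AdminModel:
    """Hypothetical stand-in for the class behind the admin model."""

    def v_message_details(self, data):  # hypothetical vertex name
        # Instance attributes set on ContactModel would be invisible here;
        # the shared variables are the only common ground.
        return f"{data['last_contact_name']}: {data['last_contact_subject']}"


shared_variables = {}
ContactModel().e_submit_valid_contact_data(shared_variables)
summary = AdminModel().v_message_details(shared_variables)
print(summary)  # Alice Martin: Booking question
```

Because each model is backed by a different object, anything stored on self in one class is out of reach for the other, which is why the contact details travel through the data argument instead.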

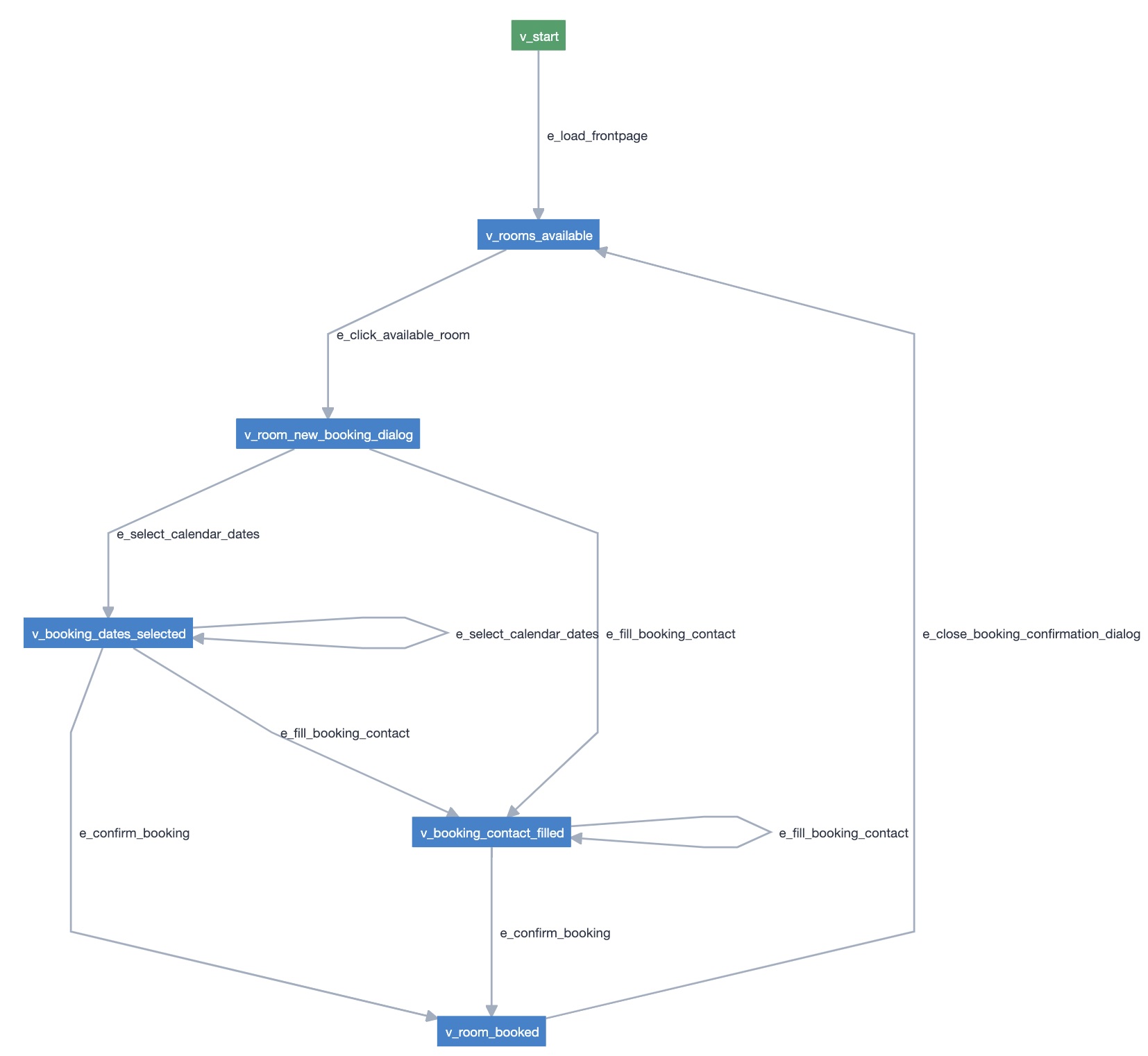

Addressing challenge 3 with MBT

Challenge 3 (i.e. new booking) can also be addressed using a simple model, having a variable named total_nights, defined at model level, for controlling the intended number of nights to book.

The previous model depicts a sequential set of actions and corresponding states, so it mimics a typical sequential automated test as seen in the pytest implementation. Even though it is feasible, there's only one path in the graph, so this model, as it is, doesn't provide exceptional value except that it makes our own model of the system visible.

Note: another possible model could deal with the fact that the contact and date selection don't need to happen in sequence, and also provide the ability to jump back to the initial page. Well, many variations can be done depending on what we want to verify and the risks we have in mind.
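
For the total_nights variable mentioned above, the test code eventually has to turn a number of nights into the dates to drag over in the calendar. A minimal sketch of that conversion follows; the booking_dates helper and the sample date are assumptions for illustration, not code from the repo.

```python
from datetime import date, timedelta


def booking_dates(check_in, total_nights):
    """Return (check_in, check_out) for a stay of `total_nights` nights."""
    if total_nights < 1:
        raise ValueError("a booking needs at least one night")
    return check_in, check_in + timedelta(days=total_nights)


check_in, check_out = booking_dates(date(2020, 11, 2), total_nights=3)
print(check_in.isoformat(), check_out.isoformat())  # 2020-11-02 2020-11-05
```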

Conclusions

Traditional, sequential tests can be easy to implement, and their code can be easy to read. However, they can become harder and harder to read, depending on code quality. Moreover, these tests are good for checking happy paths and doing negative testing, but their focus, which comes from the hard-coded path they exercise, is also their limitation.

With MBT I'm not focused on simple happy paths or negative tests. Instead, I'm trying to look at my feature from different angles, like "all" possible ways of interacting with it. Therefore, coverage with MBT increases because many more paths are exercised. By walking through those paths, we can expose hidden risks that otherwise we would miss.

Modeling is a process that in itself is also an uncovering mechanism for unknowns. During the exercise, I could see that certain actions could only be performed if I was at a given "state"... and that could make sense or not. For example, to make a new contact request, I had to refresh the front page. Therefore, while modeling, which is a process we iterate over, we are also performing exploratory testing. Having models can be beneficial for discussion, even if they do not have automation code linked to them.

Surprisingly, the code related to MBT is simple and clean. At the start I had some doubts about it. I would say that one important challenge is related to how to pass information between models, in case we want to have multiple models interacting with one another, through shared states. By combining controlled test data generation with MBT, we can keep our model being exercised until an error arises or a certain amount of time has elapsed. This is a great way to spend time with automation code: we're not running the same tests, we're running many different tests/paths along with different data.
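
The "until an error arises or a certain amount of time has elapsed" idea can be sketched as a simple loop. This is plain Python written for illustration, not the stop conditions that GraphWalker provides; the exercise function and the deliberately failing flaky_step check are hypothetical.

```python
import random
import time


def exercise(step, max_seconds, seed):
    """Call `step(rng)` repeatedly until it raises or the time budget ends.

    Returns (iterations, error), where error is None if time ran out first.
    """
    rng = random.Random(seed)
    deadline = time.monotonic() + max_seconds
    iterations = 0
    while time.monotonic() < deadline:
        try:
            step(rng)
        except AssertionError as error:
            return iterations, error
        iterations += 1
    return iterations, None


def flaky_step(rng):
    # Hypothetical check that fails for one specific generated value.
    nights = rng.randint(1, 30)
    assert nights != 13, f"booking failed for {nights} nights"


iterations, error = exercise(flaky_step, max_seconds=0.2, seed=42)
print(iterations, error)
```

Each iteration uses fresh data, so the longer the loop runs, the more combinations are tried, and the seed makes the failing sequence repeatable.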

Below is a summary of my thoughts on traditional tests vs model-based tests. It's not an exhaustive comparison but it's a starting point for additional learning iterations.

Traditional, sequential automated tests/checks:

- are simple and focused

- are good for happy path and negative testing

- have restricted coverage, even if we use data-driven testing

- can become hard to visualize (we need to infer the "visual model" by looking at the code)

Model-Based Testing:

- Focused on a model, not on a concrete example

- Doesn’t replace "traditional" tests/checks

- Greater coverage, beyond happy/negative tests

- Visualization fosters discussion/reflecting

- Ability to reuse and combine models

- It’s a way of performing exploratory testing!

MBT: Challenges and Tips:

- Many ways to model: keep it simple!

- Write models, even if you don’t automate them

- Increase coverage further with test data randomization

- Temporary data management (model vs code)

- Harder to debug

Learn more

Thanks for reading this article; as always, this represents one point in time of my evolving view :) Feedback is always welcome.